Enhancing Ad Review with Agentic AI: Continuing the Conversation

OVERVIEW

SUMMARY

This webinar from Red Oak focuses on the use of agentic artificial intelligence (AI) to enhance ad review and compliance in the financial services industry. Red Oak advisor Rob Dearman of DCG Insight addresses the challenges of AI implementation and "AI washing," emphasizing the importance of organizational readiness and quality data. Red Oak co-founder Rick Grashel explains the firm's approach, which moves away from extensive pre-training toward a system of classifications, prompts, and rules controlled by business owners. Finally, Cassie Beswick of Manulife John Hancock, a Red Oak client, shares her company's successful implementation of the Red Oak AI tool, noting the importance of customization and efficiency for global compliance review.

CRITICAL QUESTIONS POWERED BY RED OAK

What is the biggest challenge firms face when trying to adopt AI for compliance?

The primary barriers are not technology or regulation but organizational readiness. Many firms get stuck in “pilot purgatory,” endlessly testing tools without deploying them. Data quality, lack of strategy, and change management are the key hurdles. Successful adoption requires clean, AI-ready data, clear governance, and a plan for integrating AI into existing workflows.

How does Red Oak’s approach to AI in ad review differ from other solutions?

Unlike older models that require heavy pre-training and IT intervention, Red Oak puts control directly into the hands of compliance teams. Business owners create classifications, prompts, and rules — eliminating the need for complex retraining or dependence on engineers. This makes implementation faster, more adaptable across jurisdictions, and easier to maintain over time.

What tangible benefits have clients seen from implementing AI in compliance review?

Clients like Manulife John Hancock report fewer review cycles (“round trips”), improved efficiency, and more consistent findings across global teams. AI handles common issues (like outdated sources or missing disclosures), freeing human reviewers to focus on nuanced judgments. The result is faster approvals, reduced operational risk, and stronger compliance oversight.

TRANSCRIPT (FOR THE ROBOTS)

Jamey Heinze

Welcome, everybody, to the latest in Red Oak’s series of thought leadership webinars.


Today we’re not really reprising; this is a follow-up session on ad review using artificial intelligence. The title is “Enhancing Ad Review with Agentic AI,” and we’re continuing the conversation we started at the end of last year, when we began including artificial intelligence in the Red Oak Ad Review platform.

We did a webinar back in January, and so this is a follow-up from there. Welcome. We’ve got a lot to cover today and some incredible speakers to cover the material.

So, first up, we will cover “AI in Compliance: From Hype to Impact” with Rob Dearman from DCG Insight. I’ll give you a little more info on Rob here in a second.

We then have Rick Grashel, a Red Oak co-founder, who will talk about Red Oak’s AI point of view and the lessons we’ve learned since our last webinar back in January.

And then, to really give you what may be the most interesting perspective, we have Cassie Beswick from Manulife John Hancock. She is a Red Oak AI Ad Review client, so she will take you through her perspective on that.

Okay, housekeeping-wise, before we start, I want to make sure that we keep this as engaging and authentic as we can. So please ask questions. On your screen, you can see where I’ve illustrated with the arrow: there’s a Q&A button. Sometimes that Q&A button lives in the “More” section, so it may look slightly different on your screen, but the bottom line is — please click that and ask questions.

The way we’ll address questions is: the speakers, the subject matter experts, will answer them in real time by typing out the answers on screen. However, for some — if they’re bigger questions or if multiple people need to answer — we’ll save them to the end. So it’ll be a mix, but everybody will be able to see them.

If there are too many questions at the end that we can’t answer, we will capture every single one and follow up with you so you have all the information you need.

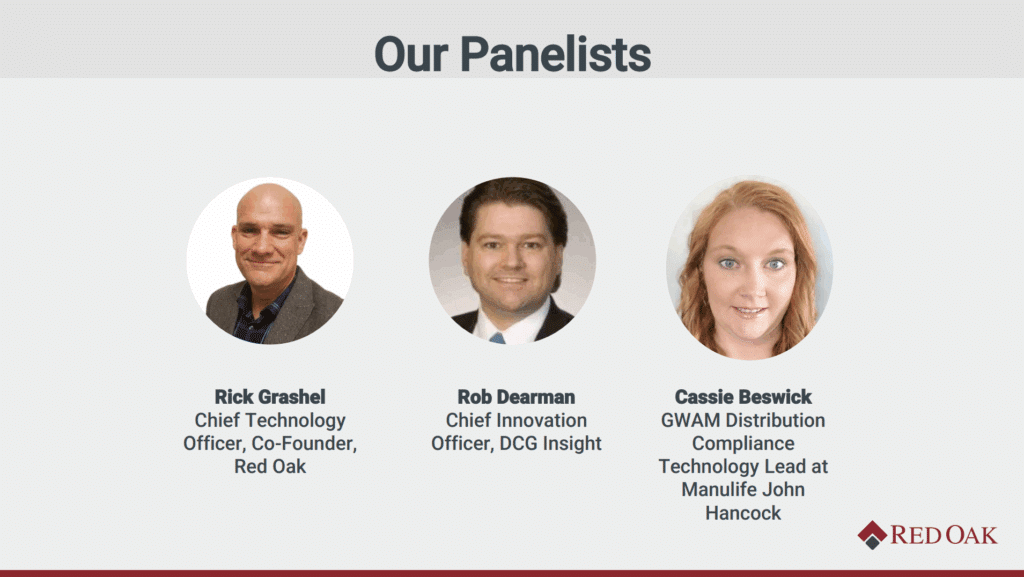

Okay, our panelists. I’m going to start on the right side of my screen with Cassie. As I mentioned, she’s with Manulife John Hancock, where she is the lead for GWAM Distribution Compliance. I forgot to ask her what GWAM stands for, so I hope she covers that for us when it’s her turn to speak.

I can tell you she’s responsible for implementing and integrating tools and systems to improve scalability, efficiency, and responsiveness to regulatory developments. She’s responsible for reviewing and approving advertising materials and social media monitoring.

She’s been with John Hancock since 2015, has a tremendous amount of industry experience, and perhaps most importantly, she lives in New Hampshire with her husband Colin, their son Calum, and their rescue dog Pete. So that’s Cassie. We’re excited to hear her perspectives.

Rob Dearman has an extremely long bio, so I’m going to just hit the high spots. Many of you know Rob. He has participated in events with us before. He is a Red Oak advisor, and we’re very lucky to have him. He’s got more than 30 years of industry experience. He started and ran the third-largest broker-dealer. He does strategy consulting for broker-dealers, RIAs, and fintech companies.

Lately, he’s been doing a tremendous amount of work in his consultancy around the applicability of artificial intelligence for our industry. He’s seen it with multiple providers and in multiple companies, so his insights are really interesting and important.

And then we move to Rick Grashel. Rick is, as I mentioned, a co-founder of Red Oak. He’s been with the company since the outset and he has built all of our technology, including layering artificial intelligence into our Ad Review tool. So he’s got a really interesting perspective from the inside out that he will share with you.

So we’re going to start this off with a poll. The poll is pretty basic: How ready do you think artificial intelligence is for use in financial services compliance workflows?

You’ll see a poll pop up on your screen. We’ll give it a second here. The answers are:

- Not ready at all

- Still early, making progress

- Ready with the right controls

- More than ready

- Not sure

We’ll give you about 10 more seconds or so, and this will be interesting just to set the stage because I’m going to pass this over to Rob here in a second. He has a very broad perspective across financial services.

Okay, let’s see the answers. All right. Only one person out of 70 said it’s not ready at all. And I think we landed in an interesting spot — “making progress,” kind of the middle answer, is the leader. But “ready with the right controls” is there too.

So at this point, I’m going to close the poll and pass this over to Rob Dearman. And if you have any thoughts on the poll, take it away, sir.

Rob Dearman

That’s awesome. You’re going to see in a second — I agree. Eighty-one percent of you said there are aspects of AI that could be used, with a whole bunch of caveats. I think you’re spot on based on what I’m doing.

I’ve worked with RIAs, broker-dealers, including some very large ones that rhyme with words like “Forgot Manley,” to help them evaluate AI and recover, in many cases, from failed projects — a lot of in-house build failures, some vendor failures.

My role today is to set the stage for this conversation. What I really love to do is cut through the hype to highlight what’s real, what isn’t, and where we’re headed.

The reason that I consult for Red Oak is I put them in that second category. They don’t talk about things unless they’re real. They don’t sell vaporware. That’s why I have no reservations in putting that Red Oak logo over the top of my left ear.

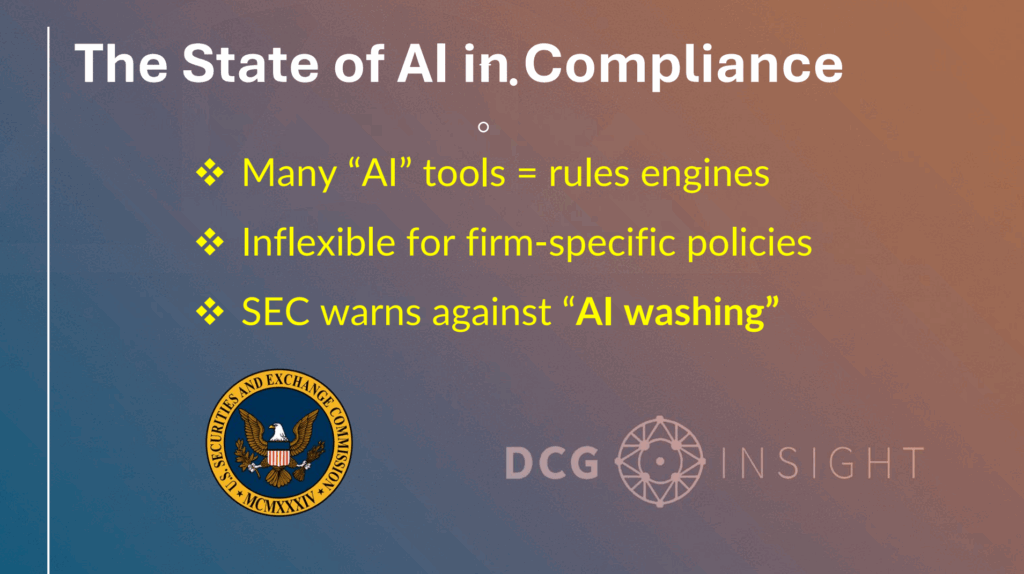

Moving forward here: the current state. Unfortunately, many of the so-called AI and machine learning tools were actually rules engines in disguise. Behind the scenes, a lot of if-then statements and hard coding. They broke down when firms showed up with their WSPs and ADVs and oversight procedures, expecting AI or ML to adapt, change, and generate.

Then they found out a couple of things: their data wasn’t ready, the rules were hard coded, and the AI technologists didn’t really understand their business that well at all.

So the SEC actually reacted to wave one of AI in fintech. Chair Gensler popularized a term for it: “AI washing.” Last year, in March 2024, the SEC fined two firms for overstating their use of AI. Regulators are watching closely.

They made it crystal clear: misleading AI claims are a compliance violation. Delphia and Global Predictions were those firms. Combined penalties: $400k. The direct quote in the press release was: “Investment advisers should not mislead the public by saying they are using an AI model when they are not. Such AI washing hurts investors.”

So for compliance officers like you, this is more than hype — it’s a real regulatory risk. The lesson from this: don’t overstate capabilities. Deliver substance, not slogans.

But here’s the hard part. How can you tell the difference? You have to do diligence when your reps show up with the latest, greatest tool. They’re on fire for you to approve it. How do you tell the difference between real AI and machine learning, and something that’s being marketed or demoed as that, but really isn’t?

This is hard. This is harder than just evaluating the latest version of MoneyGuide Pro, eMoney, Riskalyze, or Asset-Map. It’s more like the generation of robo-advisors — only 10% of which still exist today.

Cassie Beswick

Right.

Rob Dearman

The first wave of robo-advisors — we didn’t know what it was. Regulators later had to catch up, and they had to come up with regulation to say, “Hey, you’ve got to publish your algorithms.”

If you’re going to put 30% in cash, Schwab, you’ve got to tell us. If you’re going to push people into proprietary products, you’ve got to tell us.

Because regulators went through that once, they’re now once bitten, twice shy. They’re not going to get fooled again. They are on alert right now; the antennae are up.

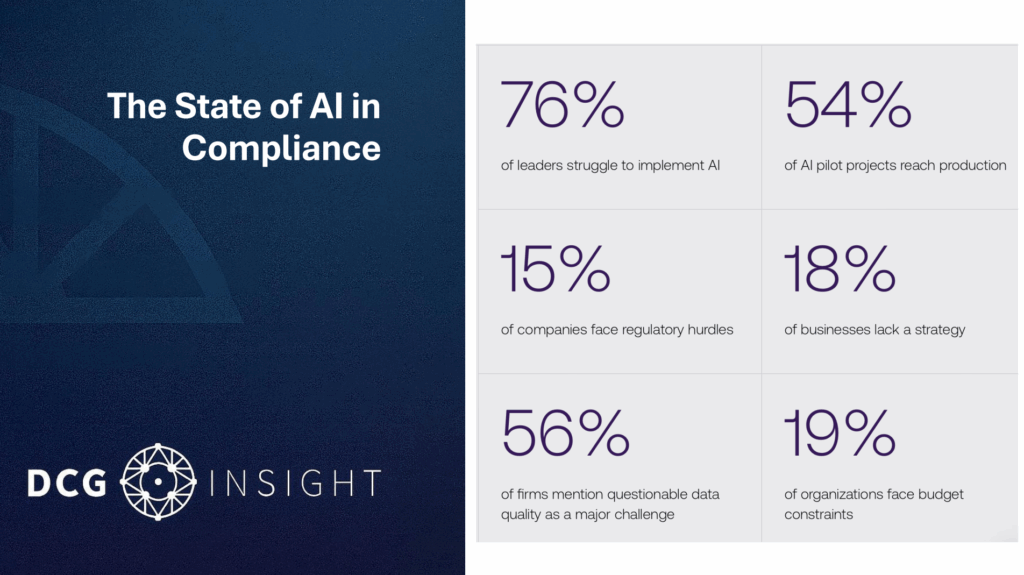

So, let’s go to the next one: the state of AI. These numbers tell the brutal truth about AI implementation in compliance today.

The most revealing statistic: 76% of leaders struggle to implement AI. This is from Vention Teams. (V-E-N-T-I-O-N teams — you can find the data at ventionteams.com.)

That’s three out of four compliance executives who know they probably need something in AI, but can’t figure out how to make it work inside their organization.

Even more telling: only 54% of AI pilot projects ever make it out the door to production. Nearly half of the initiatives firms invest in never deliver value. They get stuck in endless testing, fail when they hit real-world complexity, or choke on “dirty gas” in the engine, which is to say bad data.

One of my favorite questions to ask new clients who want to build sexy AI dashboards: “Is your data AI-ready?”

Rick Grashel

Huh?

Rob Dearman

What does that mean? Let me ask you the same thing: Is your data AI-ready?

Have you ever gotten questions from reps about performance, billing, or missing transactions? Ever had trade blotter issues with FINRA or the SEC?

If the answer is yes, then you’ve got to circle back and rebuild some foundation before you can do the “sexy” stuff with AI.

Now, here’s where it gets interesting. Only 15% of companies face significant regulatory hurdles as their main challenge with AI. It’s actually not the biggest barrier.

The real problems are organizational:

- 18% lack a strategy. They’re experimenting without a clear plan.

- 56% struggle with data quality. AI is only as good as the data it’s fed.

- 19% face budget constraints.

The pattern is clear: AI implementation failure isn’t about tech limitations or regulatory roadblocks. It’s about fundamental organizational readiness, strategy, data quality, and change management.

The firms succeeding with AI in compliance aren’t necessarily the ones with the biggest budgets or the flashiest tech. They’re the ones who’ve done the foundational work first.

Let’s go to the next slide. I’m really excited to share this one with you. This just came out less than 24 hours ago.

I work with Michael Kitces sometimes on his surveys and reports. This is from the 2025 Kitces Advisor Tech Study. The full report will be released during Michael’s talk at Future Proof next month. But you — this audience — are the very first to see this sneak peek.

And the data is fascinating.

First: Document review is clearly the “gateway drug” for advisor AI adoption. It’s leading every category because it’s low risk and has obvious ROI.

Notice — even the skeptics aren’t at zero. 8% of AI skeptics are using AI for documents and client service. When the value proposition is undeniable, adoption happens, no matter their attitude.

But here’s the key insight: advisors are mostly using AI for operational efficiency — document review, planning prep, client service. They’re barely touching strategic innovation. Only 4% are developing new service offerings with AI.

So, the real opportunity is with the “AI moderates” — the persuadable middle. They’re consistently trying AI across categories, and they’ll drive mainstream adoption.

Bottom line: we’re seeing rational, risk-averse adoption. The firms that move beyond efficiency into true innovation will create the biggest competitive advantages.

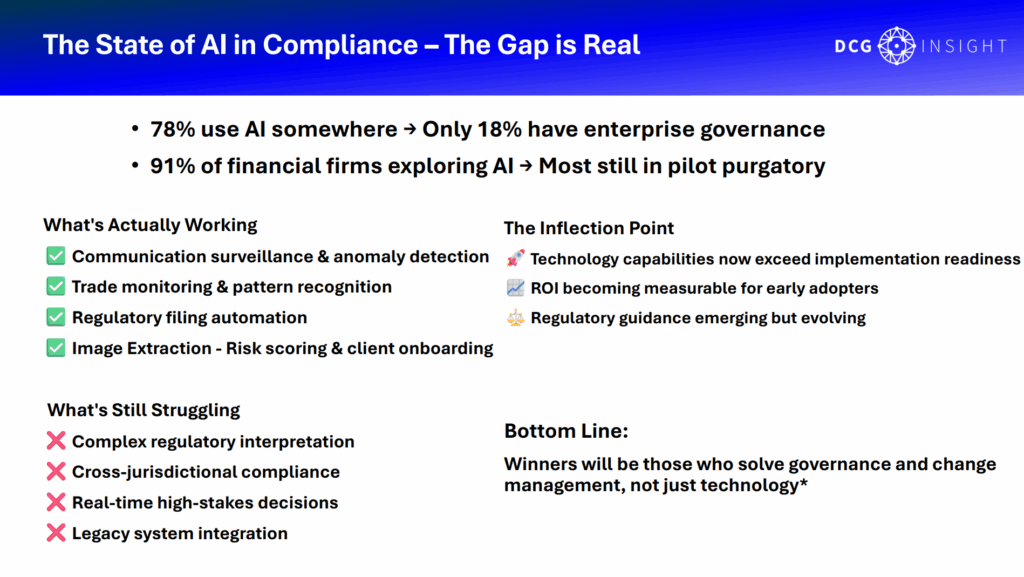

Now let me cut through the hype with some hard data.

While 78% of organizations claim they’re using AI somewhere, only 18% have proper enterprise governance in place.

In financial services specifically, 91% of firms say they’re exploring AI — but most are stuck in what I call pilot purgatory.

Anybody there? Bueller? Bueller? Pilot purgatory is endless testing without ever getting to production.

So, what’s actually working? There are four areas worth calling out:

- Communication surveillance and anomaly detection. AI catches patterns human reviewers miss. You see the same thing in cancer research, where AI detects anomalies earlier than oncologists.

- Trade monitoring. We’re seeing real improvements in both speed and accuracy.

- Regulatory filing automation. AI handles mundane but critical work effectively.

- Risk scoring and client onboarding. Tools like FP Alpha or AI-powered proposal software can pre-populate onboarding from something as simple as a client statement photo.

But let’s be equally honest about what’s broken:

- AI struggles with complex regulatory interpretations and nuanced judgment calls.

- Cross-jurisdictional compliance is still a nightmare. AI can’t handle differences across regions in real time.

- High-stakes, real-time decisions and legacy system integration remain major pain points.

Here’s the critical insight: we’ve hit an inflection point. Tech capabilities now exceed organizational readiness. The limiting factor isn’t the models or even regulation — it’s organizational readiness.

ROI is becoming measurable for early adopters. Regulatory guidance is emerging. But the winners will be the firms that solve governance and change management, not just those that buy better tech.

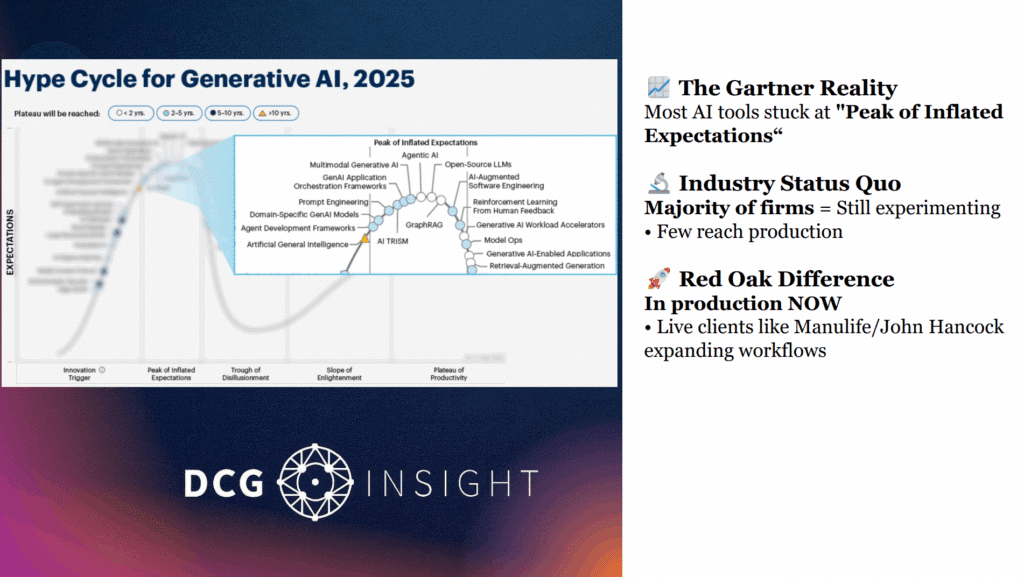

So, if you’ve seen the Gartner Hype Cycle, you’ll recognize this pattern.

Look at where we are in 2025. Almost all AI tools — including agentic AI — are stuck at the peak of inflated expectations.

This is where marketing promises are huge, but real-world delivery falls short.

Vendors make bold claims. But when you dig deeper:

- Pilot programs don’t scale.

- Demos aren’t using real data.

- Implementations get stuck in testing.

The reality? Most firms are still experimenting, running pilots, evaluating vendors.

And here’s the question: how are you doing that? Have you updated your due diligence to evaluate AI properly? Are you running pilots on real data?

At DCG Insight, when clients ask me for a bake-off — “Who are the top three AI vendors we should test?” — Red Oak is always on that list.

Why? Because they’re different. They’re not just running pilots. They’re already in production. They’ve broken ankles and stepped in potholes. They’ve planted their flag. They’re expanding workflows. They’re live.

Now, let’s talk about what’s really exciting: agentic AI.

This isn’t just another chatbot.

ChatGPT waits for you to ask it something. Agentic AI reasons through complex scenarios, applies your rules, and takes the next step automatically. It doesn’t just wait for prompts — it actively works through edge cases using your firm’s protocols.

That’s a game-changer. Imagine if every compliance review were done with the consistency of your best compliance officer — without fatigue, bias, or errors.

Agentic AI doesn’t just flag issues. It applies your risk frameworks, considers context, and can even initiate follow-ups.

That’s why I’m so excited to stop talking and hand it over to Cassie and Rick — the real experts here — to show you what implementation actually looks like.

Thanks, Jamey.

Jamey Heinze

Rob, thank you very much. That was a tremendous amount of information in a very short amount of time. So again, thank you.

We’re going to ask our second poll question now — and this one is specific to your firm, not AI technology in general.

Where is your firm currently with AI adoption in compliance workflows?

Let’s pop that up. The answer choices are:

- We’re just exploring options.

- We’re piloting tools.

- We’re actively using AI in workflows.

- We’re expanding because we’ve seen early success.

- We have no plans yet.

- Not sure.

Okay, we’ll give that another few seconds… All right, let’s see the answers.

As expected, most are still in the early stages, piloting tools — just like Rob suggested a moment ago. But we do have some firms actively using AI, and we know we’re about to hear from Cassie about her firm’s experience. Perfect.

I’m going to close this poll and hand it over to Rick now so he can walk us through Red Oak’s point of view. Rick, you should have control of the slides.

Rick Grashel

Okay, thanks, Jamey. And thanks, Rob — awesome stuff.

And thanks to everyone for the feedback in the polls. It’s clear that people are at different stages in this journey, but you don’t need to be super technical to understand the challenges coming.

Just last week I saw an Economist article saying AI is entering the “trough of disillusionment.” From executives to individual contributors, people are realizing the hype is huge, but the returns haven’t matched. Firms are throwing millions of dollars and countless hours into initiatives, but they’re not seeing the promise.

That’s exactly what we at Red Oak are working through with our AI Review product. So, I want to share our point of view, some lessons we’ve learned over the past nine months, and how we’re approaching compliance review differently.

Let’s start with where we used to be.

AI training in the past was painful. Anyone who has been through it knows: you had to train the AI on every possible scenario, every material type, every supervisory procedure nuance. That meant:

- Tons of consulting hours.

- AI engineers working hand-in-hand with compliance reviewers.

- Reviewers labeling documents and examples on top of their day jobs.

And every time your WSPs changed, your rules changed, or your marketing materials evolved — you had to retrain. Again and again. It was expensive, disruptive, and exhausting.

Why was this the case? Think of it like speed dating.

Older AI models had very small context windows. You could only give them a tiny piece of information at a time, then wait for the next “round” to add more context. Every new round, you had to remind them of what you’d already said. It was inefficient, and the models weren’t smart enough to hold bigger, more nuanced conversations.

That’s why you had to pre-train them so heavily — to anticipate nearly every question and response in advance.

But now things have changed.

The newer models are 10–20x smarter, with much larger context windows. Instead of a speed date, you can now have a long dinner conversation. You can give the model full context, and it can return more complete and meaningful results.
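The speed-dating versus long-dinner comparison can be made concrete with a little arithmetic. The Python sketch below is a simplification, not how any particular model or Red Oak’s product works: it assumes one word equals one token, and it assumes the instructions must be restated in every round because the model keeps nothing between calls. The function `plan_rounds` and the window sizes are hypothetical.

```python
def plan_rounds(document: str, instructions: str, window: int) -> list[str]:
    """Split a document into prompts that each fit a fixed context window.

    Assumptions: one word == one token, and every prompt must restate the
    full instructions because the model retains nothing between calls.
    """
    instruction_words = instructions.split()
    budget = window - len(instruction_words)  # room left for document text
    if budget <= 0:
        raise ValueError("window too small to hold the instructions")
    words = document.split()
    prompts = []
    for start in range(0, len(words), budget):
        chunk = words[start:start + budget]
        # Each round repeats the instructions, then adds the next slice of text.
        prompts.append(" ".join(instruction_words + chunk))
    return prompts


instructions = "Flag promissory or unbalanced language in the text below."  # 9 words
document = " ".join(f"word{i}" for i in range(1000))  # stand-in for a 1,000-word ad

small = plan_rounds(document, instructions, window=100)     # small, older-style window
large = plan_rounds(document, instructions, window=10_000)  # large, newer-style window
print(len(small), len(large))  # 11 1
```

With a 100-word window, the 1,000-word piece needs 11 separate rounds, each repeating the 9-word instructions; with a 10,000-word window, the same material and instructions fit in a single request.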

So what does that mean for us?

It means pre-training is over. At least in advertising review, where 95% of materials are public-facing and already baked into the large language models, there’s no need for heavy retraining. The AI already “knows” what financial advertising looks like.

Now let’s talk about Red Oak’s approach.

We call it “disruptive table stakes.” Of course, we want to reduce risk, improve compliance, cut noise, and speed time to market — those are table stakes.

But we’ve gone further:

- We’ve removed IT from the equation.

- We’ve made it easy to use.

- And most importantly, we’ve put control in the hands of the business owners — the compliance reviewers — where it belongs.

To do that, we went “old school.” Think of a submission as a manila folder. Inside it: an intake form, the marketing material, maybe a sticky note or two. That’s the way we used to do compliance review.

We simply digitized that metaphor.

We introduced three concepts:

- Classifications – your sticky notes. Things like “Promissory,” “Not Fair & Balanced,” or “Testimonials.”

- Prompts – groups of classifications that run together. For example, a “FINRA 2210 Prompt” might group “Promissory” and “Not Fair & Balanced.”

- Rules – logic that says which prompts apply to which types of material.

And critically: business owners, not IT or AI engineers, create and manage all of these.
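The three concepts map naturally onto a small data model. The Python sketch below is illustrative only: the class and field names, the `material_types` field on `Rule`, and the severity assigned to “Not Fair & Balanced” are assumptions, not Red Oak’s actual schema. The severity levels mirror the ones Rick describes (informational through critical).

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Severity(IntEnum):
    """Severity levels; IntEnum ordering lets findings be compared and weighted."""
    INFORMATIONAL = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Classification:
    """A 'sticky note': a named issue the AI should look for."""
    name: str
    definition: str
    severity: Severity


@dataclass
class Prompt:
    """A group of classifications that run together."""
    name: str
    classifications: list[Classification] = field(default_factory=list)


@dataclass
class Rule:
    """Which prompt applies to which material types (hypothetical shape)."""
    prompt: Prompt
    material_types: frozenset


promissory = Classification(
    "Promissory",
    "Language that promises or implies guaranteed future results.",
    Severity.HIGH,
)
not_fair = Classification(
    "Not Fair & Balanced",
    "Benefits are presented without a balanced discussion of risks.",
    Severity.MEDIUM,  # assumed severity, for illustration only
)
finra_2210 = Prompt("FINRA 2210 Prompt", [promissory, not_fair])
retail_rule = Rule(finra_2210, frozenset({"retail communication"}))
```

Keeping these as plain records that business owners edit, rather than as something baked into a model, is what allows a definition to change without retraining.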

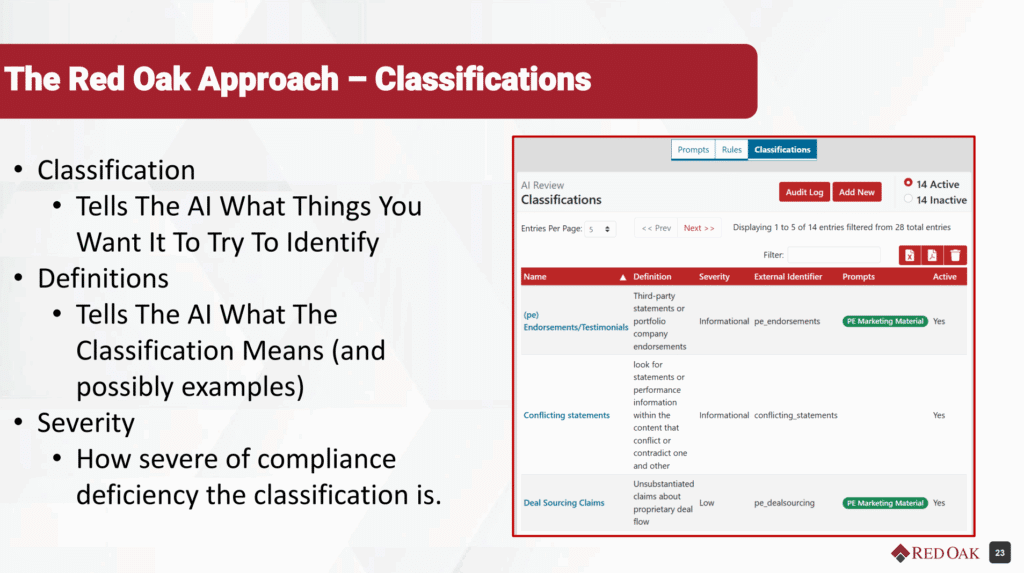

Now let’s look at how this actually works.

Here’s an example screen from our product — a grid view of classifications.

You can see one labeled “Endorsements and Testimonials – PE.” Its definition says: third-party statements or portfolio company endorsements. The system knows that classification applies specifically to private equity marketing materials.

That’s what a classification is: a defined “sticky note” that tells the AI what to look for. The definition matters — it gives the AI more context, like instructions. You can even add examples of what counts and what doesn’t. That makes it sharper.
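Because the definition and examples act as instructions, one way to picture what the AI receives is a rendered text block. The Python sketch below assumes a simple layout; the `render_instructions` function, the field names, and the two example sentences are hypothetical and are not taken from Red Oak’s product.

```python
from dataclasses import dataclass, field


@dataclass
class Classification:
    name: str
    definition: str
    counts: list[str] = field(default_factory=list)          # examples that should be flagged
    does_not_count: list[str] = field(default_factory=list)  # near-misses that should not


def render_instructions(c: Classification) -> str:
    """Render a classification's definition and examples as plain-text instructions."""
    lines = [f"Classification: {c.name}", f"Definition: {c.definition}"]
    if c.counts:
        lines.append("Examples that COUNT:")
        lines.extend(f"- {ex}" for ex in c.counts)
    if c.does_not_count:
        lines.append("Examples that do NOT count:")
        lines.extend(f"- {ex}" for ex in c.does_not_count)
    return "\n".join(lines)


testimonials_pe = Classification(
    name="Endorsements and Testimonials – PE",
    definition="Third-party statements or portfolio company endorsements.",
    counts=["Portfolio company CEO: partnering with this fund transformed our business."],
    does_not_count=["A factual list of the fund's current portfolio companies."],
)
print(render_instructions(testimonials_pe))
```

The negative examples matter as much as the positive ones: they tell the model where the boundary of the classification sits, which is what makes a definition “sharper.”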

Classifications also have severity levels. Some findings are informational. Others are low, medium, high, or critical risk. If the AI flags something as “promissory,” for instance, that’s likely a high-severity issue.

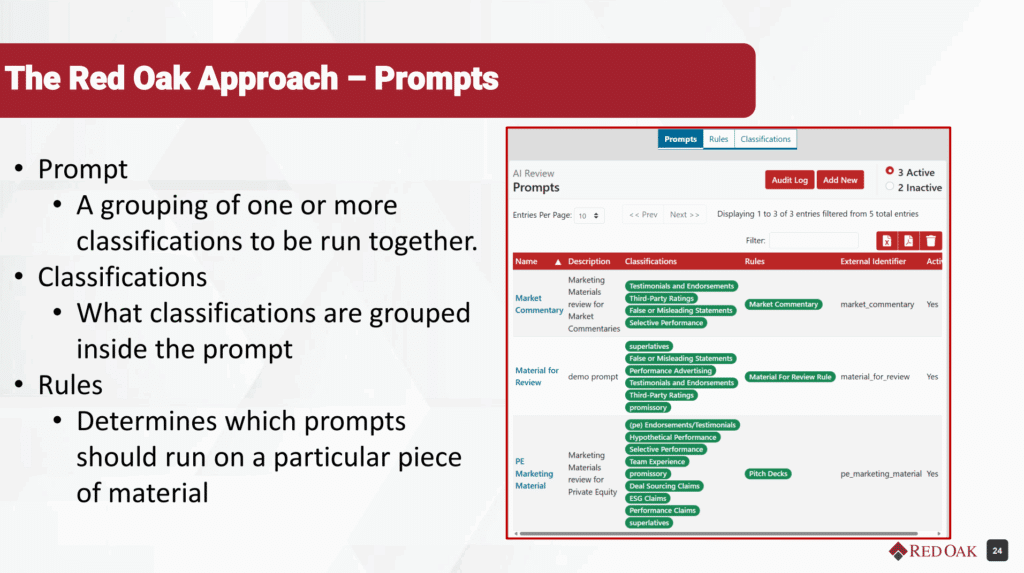

Next, we group classifications into prompts. For example, a “Market Commentary Prompt” or “General Material Review Prompt.” Each prompt has specific classifications attached.

Then, rules tell the system when to use which prompt. For example:

- Use the “OSC Prompt” only for Canadian workflows.

- Never run internal material through certain prompts.

This is where determinism matters. We don’t just say “AI, figure it out.” That’s risky. We tell it exactly when to apply certain compliance rules, so it avoids hallucinations and misclassification.
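Here is a minimal sketch of that determinism in Python, under stated assumptions: a submission carries a workflow region and an audience, and each rule names one prompt plus optional region and internal-material restrictions. The `Submission`, `Rule`, and `prompts_for` names, the region codes, and the choice to exclude internal material by default are hypothetical. The point is that plain code, not the model, decides which prompts run.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Submission:
    region: str    # workflow region code, e.g. "CA" or "US" (hypothetical)
    audience: str  # "public" or "internal" (hypothetical)


@dataclass(frozen=True)
class Rule:
    prompt: str
    regions: Optional[frozenset] = None  # None means the prompt may run in any region
    exclude_internal: bool = True        # assumed default: skip internal material


RULES = [
    Rule("General Material Review Prompt"),
    Rule("OSC Prompt", regions=frozenset({"CA"})),  # Canadian workflows only
]


def prompts_for(sub: Submission, rules: list) -> list:
    """Select prompts with plain if-statements; the model never makes this choice."""
    selected = []
    for rule in rules:
        if rule.exclude_internal and sub.audience == "internal":
            continue
        if rule.regions is not None and sub.region not in rule.regions:
            continue
        selected.append(rule.prompt)
    return selected


print(prompts_for(Submission("CA", "public"), RULES))    # ['General Material Review Prompt', 'OSC Prompt']
print(prompts_for(Submission("US", "public"), RULES))    # ['General Material Review Prompt']
print(prompts_for(Submission("CA", "internal"), RULES))  # []
```

Because the selection is ordinary code, the same submission always gets the same prompts, and an auditor can read exactly why the OSC checks did or did not run.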

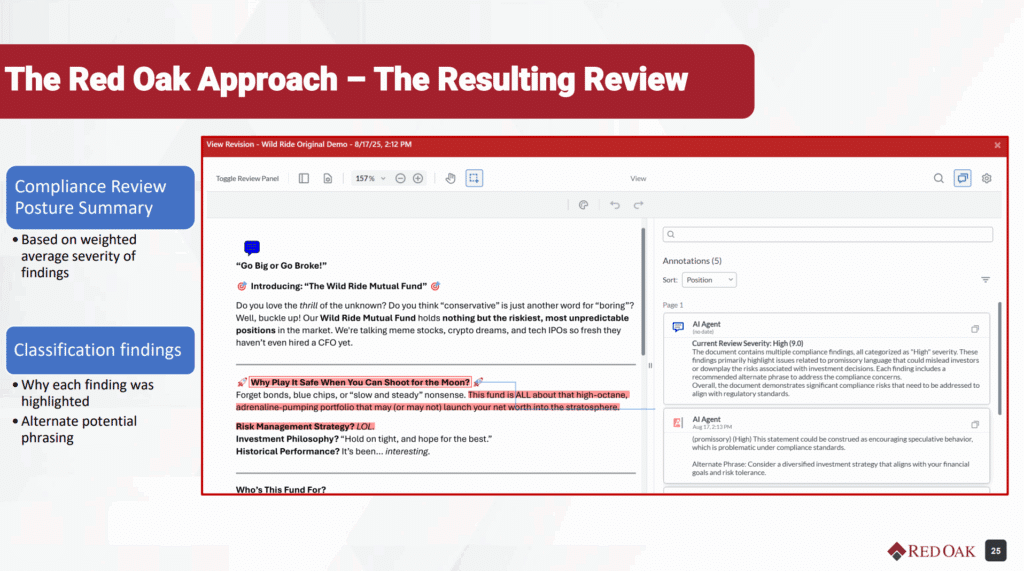

Now, here’s what a real AI review output looks like.

At the top, you see a Review Posture Summary. It’s a weighted severity score of all the findings. In this case, the AI said: multiple high-severity findings related to promissory language. Overall, significant compliance risks.

So right away, the reviewer knows where the piece stands — before even reading a word.

Then you see the findings. Each one lists:

- The classification (sticky note) applied.

- The text from the material that triggered it.

- Why the AI thinks it’s a violation.

- And suggested alternate phrasing.
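A sketch of how findings like these could roll up into a Review Posture Summary, in Python. The webinar describes the summary only as a weighted severity score, so the weights, the thresholds, and the function names here are assumptions chosen for illustration. The first finding uses the phrase discussed in the session; the second finding and both suggested rewrites are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical weights; the session does not say how severities are weighted.
SEVERITY_WEIGHTS = {"informational": 0, "low": 1, "medium": 3, "high": 7, "critical": 15}


@dataclass
class Finding:
    classification: str  # the "sticky note" applied
    excerpt: str         # text from the material that triggered it
    rationale: str       # why the AI thinks it is a violation
    suggestion: str      # suggested alternate phrasing
    severity: str        # a key of SEVERITY_WEIGHTS


def posture_score(findings: list) -> int:
    """Weighted severity score across all findings."""
    return sum(SEVERITY_WEIGHTS[f.severity] for f in findings)


def posture_label(score: int) -> str:
    """Map the score to a headline using hypothetical thresholds."""
    if score >= 14:
        return "Significant compliance risks"
    if score >= 5:
        return "Moderate compliance risks"
    return "Minor or no compliance risks"


findings = [
    Finding("Promissory",
            "Why play it safe when you can shoot for the moon?",
            "Encourages speculative behavior.",
            "Explore growth-oriented strategies that match your goals and risk tolerance.",
            "high"),
    Finding("Promissory",  # hypothetical second finding
            "Returns you can count on, year after year.",
            "Implies guaranteed future performance.",
            "A strategy designed to pursue long-term growth.",
            "high"),
]
score = posture_score(findings)
print(score, posture_label(score))  # 14 Significant compliance risks
```

Weighting severities non-linearly, as assumed here, means a couple of high-severity findings outweigh many informational ones, which matches the intent of giving the reviewer a quick read on where the piece stands.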

For example, the phrase “Why play it safe when you can shoot for the moon?” was flagged as Promissory. The AI noted it encourages speculative behavior. It even suggested more compliant phrasing.

Now, marketers may not always love the “puffery” being toned down, but the AI is giving compliance reviewers a head start. Think of it like a spell-checker for compliance. It doesn’t replace the human reviewer, but it speeds them up, improves consistency, and reduces turnaround.

Let’s talk about lessons learned from real-world implementations.

First, hallucinations are real. I’ll give you an example. Gemini once produced this statement: “Parachutes are no more effective than backpacks at preventing death or major injury when jumping from an aircraft.”

That’s absurd — but the problem was the study it cited tested jumps from two feet off the ground. So the AI wasn’t lying, but it lacked critical context.

Hallucinations can happen for several reasons:

- Contextual confusion. For example, we tell the AI “don’t allow promissory language.” But it might flag the disclosure “Past performance is not indicative of future results” as promissory, even though that’s required.

- Poorly refined classifications. If your definitions are vague, the AI will misinterpret.

- Using AI as a lexicon. It isn’t one. You can’t rely on it to check corporate trademarks or legal entity suffixes. That’s not what it’s built for.

What works? Use AI for sentiment-based analysis — spotting language that feels promissory, exaggerated, or misleading. That’s where it excels.
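One way to act on this division of labor is to keep lexicon-style checks in deterministic code and reserve the model for sentiment. The Python sketch below is a hypothetical illustration, not Red Oak’s implementation: the approved-name table, the disclosure list, and the choice to strip verbatim required disclosures before sentiment review (to avoid the contextual confusion described above) are all assumptions.

```python
import re

# Hypothetical firm-maintained tables; not taken from the webinar.
APPROVED_SUFFIXES = {"Example Advisors": "LLC"}  # base name -> required legal suffix
KNOWN_SUFFIXES = r"(LLC|Inc\.|Corp\.|L\.P\.)"
REQUIRED_DISCLOSURES = ["Past performance is not indicative of future results."]


def check_entity_suffixes(text: str) -> list:
    """Deterministic lexicon check: every mention of an approved name must carry its suffix."""
    problems = []
    for base, required in APPROVED_SUFFIXES.items():
        pattern = re.compile(re.escape(base) + r"(?:,?\s+" + KNOWN_SUFFIXES + r")?")
        for match in pattern.finditer(text):
            found = match.group(1)  # None when no recognized suffix follows the name
            if found != required:
                problems.append(f"{base!r} used with suffix {found!r}; expected {required!r}")
    return problems


def missing_disclosures(text: str) -> list:
    """Exact-match check for required disclosures (no AI involved)."""
    return [d for d in REQUIRED_DISCLOSURES if d not in text]


def text_for_sentiment_review(text: str) -> str:
    """Strip verbatim required disclosures so the model cannot flag them as promissory."""
    for disclosure in REQUIRED_DISCLOSURES:
        text = text.replace(disclosure, "")
    return " ".join(text.split())


ad = "Example Advisors, Inc. helps you grow. Past performance is not indicative of future results."
print(check_entity_suffixes(ad))      # ["'Example Advisors' used with suffix 'Inc.'; expected 'LLC'"]
print(missing_disclosures(ad))        # []
print(text_for_sentiment_review(ad))  # Example Advisors, Inc. helps you grow.
```

Exact-match checks like these are cheap, repeatable, and never hallucinate, so they can run alongside the AI review rather than asking the model to act as a dictionary.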

Second, take one small bite at a time. Don’t roll out 100 classifications at once. Work iteratively, test each one in production, and refine before adding more. Otherwise, you’ll create “classification collisions” where rules contradict each other.

Finally, remember — you’re never done. This is a continuous improvement cycle.

- Models keep improving.

- We’re refining the back-end logic based on live client feedback.

- We’re adding reporting to show which classifications are working well, and which need adjustment.
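A per-classification report of the kind described here could be as simple as tracking how often reviewers agree with each AI flag. In the Python sketch below, the feedback records, the 50% threshold, and the `classification_report` function are hypothetical; a real report would draw on actual reviewer dispositions.

```python
from collections import defaultdict

# Hypothetical reviewer dispositions: (classification, reviewer agreed with the AI flag).
feedback = [
    ("Promissory", True), ("Promissory", True), ("Promissory", False),
    ("Not Fair & Balanced", False), ("Not Fair & Balanced", False),
    ("Not Fair & Balanced", True),
    ("Testimonials", True),
]


def classification_report(records, min_rate=0.5):
    """Agreement rate per classification, marking low performers for refinement."""
    tallies = defaultdict(lambda: [0, 0])  # classification -> [agreed, total]
    for name, agreed in records:
        tallies[name][1] += 1
        if agreed:
            tallies[name][0] += 1
    report = {}
    for name, (agreed, total) in tallies.items():
        rate = agreed / total
        status = "ok" if rate >= min_rate else "needs refinement"
        report[name] = (round(rate, 2), status)
    return report


for name, (rate, status) in classification_report(feedback).items():
    print(f"{name}: {rate:.0%} agreed, {status}")
```

On this sample data, the loop prints 67% for Promissory (ok), 33% for Not Fair & Balanced (needs refinement), and 100% for Testimonials (ok). A low agreement rate is a signal to tighten the classification’s definition or add examples, in the spirit of the iterative, one-small-bite approach.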

Upcoming features will strengthen this further:

- The ability to hide AI reviews from submitters (so only reviewers see them).

- An AI Vision Agent to analyze images for compliance risks.

- An AI Transcription Agent to process audio/video files, generate transcripts, and review them for compliance.

The key is to treat this as an evolving partnership between business owners and the technology. Done right, AI becomes a force multiplier — reducing noise, improving quality, and giving reviewers time to focus on what really matters.

Jamey Heinze

All right, thank you very much, Rick. That was a lot of good stuff.

I’m a little taken aback by your suggestion that marketing might use puffery, and I’m going to fight you on that later, so stay tuned.

Now we get to the part that many people have been waiting for: introducing Cassie Beswick. She’ll share her perspective on the journey that Manulife John Hancock went through in evaluating AI vendors, and she’ll give feedback on what’s working in practice.

So, Cassie, please take it away.

Cassie Beswick

Thanks so much, Jamey.

I want to start by echoing something Rick said: I’m primarily a compliance reviewer, not an IT professional. I know about as much about AI as most of you, so when I speak today, it’s from the same place you’re coming from.

That said, I think we’ve been really successful in our AI implementation so far, and I hope I can share some insight into how we got here.

For context: my team is responsible for Global Wealth and Asset Management compliance — that’s your “GWAM,” Jamey. We oversee business lines across North America, Asia, and Europe, so we have to account for multiple regulatory jurisdictions. That’s been one of our biggest challenges with AI vendors: many of them are built just for U.S. use cases, or at most U.S. and Canada. Global adaptability is essential for us.

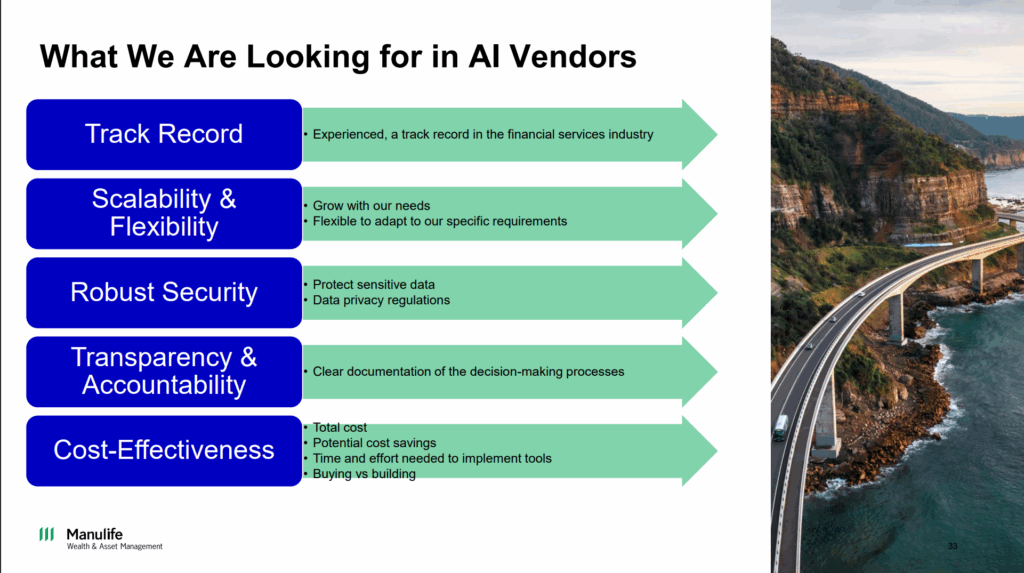

If you didn’t attend our January webinar or the Red Oak conference where my colleague Derek Stern spoke, let me summarize what we prioritize when evaluating AI vendors:

- Track record. We need to trust that a vendor will protect our data and has credibility in the industry.

- Understanding of compliance. They must know our process and business objectives, not just sell generic tech.

- Flexibility. The ability to adapt to jurisdiction-specific requirements across multiple regions.

- Transparency. We need visibility into how the AI is making decisions to meet regulatory expectations.

Those four areas guided our vendor selection and continue to shape how we approach AI adoption.

When we thought about integrating AI into our compliance workflows, a few key factors were top of mind:

- Integration. Is it easy to use? Does it fit naturally into our existing process without adding extra steps or requiring another system?

- Customization. Can we adapt it to different business lines and regions?

- Accuracy. False flags are a huge problem. If reviewers and business users see too many irrelevant flags, they lose trust and stop using the tool.

- Consistency. This has been one of the biggest wins for us. Previously, five or six human reviewers might give slightly different feedback. AI provides a consistent baseline of compliance checks across every document.

- Efficiency. Our submission volumes keep going up. We need tools that help reviewers focus on nuanced, higher-value feedback instead of spending time correcting simple issues like outdated sources or missing disclosures.

Our ultimate goal with Red Oak’s AI review tool was to enhance human review. We wanted AI to help submitters clean up their materials before they hit compliance, so compliance reviewers could focus on complex or judgment-heavy issues.

By doing this, we’ve seen:

- Faster turnaround times.

- Cleaner submissions.

- Compliance reviewers applying their expertise where it matters most.

For us, AI isn’t about replacing people — it’s about freeing them to do the work that requires human judgment.

As I mentioned, we were stuck in pilot mode with other vendors for years. A few consistent challenges came up:

- Customization limits. Many tools could only flag issues across all workflows or none. For a global firm, that doesn’t work. We needed rules that could, for example, apply a specific Canadian OSC requirement only on Canadian workflows, not across the U.S. or Asia.

- Jurisdiction blind spots. Several vendors only supported U.S. regulations, or at most U.S. and Canada. That left us with huge gaps in Europe and Asia.

- Rigid implementation. Some tools required “all or nothing” adoption, which caused irrelevant flags and slowed reviewers down instead of helping them.

Red Oak’s AI review tool solved these pain points. With its prompts, classifications, and rules, we can:

- Run the right checks only on the right workflows.

- Exclude internal documents automatically.

- Tailor compliance checks region by region.

This flexibility has made a massive difference. It means the AI outputs are relevant, valuable, and trusted — which is exactly what we needed.

When Derek spoke at the Red Oak conference earlier this year, we were still in the pilot stage. To give you an update on the timeline:

- We now have the Red Oak AI tool live on 10 workflows, with 4 more in development.

- The rollout has been iterative — workflow by workflow, region by region. Starting small was key.

What worked well for us:

- Collaborative setup. We shared feedback with the Red Oak team about our struggles with other vendors. They helped us design prompts, classifications, and rules that truly fit our business.

- Personalized support. For example, we created rules that ensure OSC-specific flags only run on Canadian workflows, and rules that exclude internal material from scanning.

- Seamless integration. Submitters don’t need to click anything extra or go to a new system. They follow the same submission process, and the AI results are generated automatically.

The result:

- Submitters get instant AI feedback and can make corrections before human review.

- Compliance reviewers now focus on nuanced issues, instead of simple fixes like “add disclosure” or “update source.”

- We’re already seeing a reduction in review rounds, which is the efficiency gain we were aiming for.

Looking forward, we’re particularly excited about new Red Oak features like AI-driven transcription for audio and video files. For example, reviewing an hour-long podcast will be much easier with a transcript and AI scan upfront.

Jamey Heinze

Cassie, your spidey sense was perfect. Somebody just asked: How difficult was the implementation process?

Cassie Beswick

Great question. At first glance, it can feel daunting. But we approached it iteratively:

- Start small. We began with the most critical regulations — the ones we always want flagged — and applied them to specific workflows.

- Test in stages. We ran scans on documents in progress, approved documents, and a variety of sample pieces to refine prompts and classifications.

- Launch in phases. We rolled out an initial “round one” AI version, then collected feedback from submitters and compliance reviewers.

- Refine continuously. Now that we have about a month of active data across 10 workflows, we’re analyzing both AI and human comments. We’re identifying new flags to add and fine-tuning existing ones.

The Red Oak team was a strong partner throughout this process — not just with technical setup, but also with language, rules, and customization. That collaboration made the rollout much smoother.

Jamey Heinze

That’s great. Thank you, Cassie, and thank you for offering to talk with your colleagues here if they have additional questions.

We have one more question, and Rob, I bet you could talk about this for hours. But we’re going to ask you to do it in 30 seconds. Based on your perspective across the industry and seeing how AI is evolving, what do you think is the next big thing we should be paying attention to?

Rob Dearman

Jamey, 30 seconds? That’s tough. But here’s the short version: the number one AI use case I’m asked about today is Next Best Action (NBA).

Firms — whether PE-backed, VC-backed, or insurance-owned — all want to know how to:

- Grow wallet share.

- Expand services delivered to clients.

- Move into higher-value markets like high-net-worth or family office services.

In the old “big data” era, we had lots of predictive analytics but not much action. With AI, especially when paired with clean data, NBA can deliver real actions.

Large firms like Morgan Stanley and Envestnet are already piloting NBA for cross-selling, expanding client relationships, and training the next generation of advisors with practical, in-context guidance.

That’s the big one to watch.

Jamey Heinze

All right, Rob — that was 33 seconds, but we’ll give you the win.

We are at the top of the hour. Someone asked earlier if we’ll be sending out the presentation — the answer is yes. We’ll share the recording, just like we always do. Our goal is to provide useful, actionable information for our community as you evaluate artificial intelligence.

On your screen, you’ll see links to additional resources — all available on the Red Oak website. Those include related webinars, white papers, and other tools to support your compliance teams as you explore AI.

With that, I want to thank all of our panelists — Rob, Rick, and Cassie — for their insights and candor. And thanks to all of you for your engagement and thoughtful questions.

We wish everyone a wonderful rest of the day.